Learning Intelligence

OpenAI Just Went Open-Source

How to use OpenAI’s new GPT-OSS model completely free and offline

OpenAI just released GPT-OSS, a new family of open-weight models you can run completely offline. This release includes two models:

→ GPT-OSS 120B achieves near-parity with OpenAI's o4-mini on core reasoning benchmarks and runs on a single high-end GPU (80GB+ VRAM)

→ GPT-OSS 20B delivers results similar to OpenAI's o3-mini on common benchmarks and needs only about 16GB of memory, so it runs on many modern laptops and desktops. That makes it a strong choice for everyday local use.
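Why does a 20B model fit in 16GB? A rough back-of-the-envelope estimate helps: weight memory ≈ parameter count × bits per parameter ÷ 8. The sketch below assumes about 21B total parameters for GPT-OSS 20B and about 117B for GPT-OSS 120B, stored at roughly 4.25 bits per parameter (MXFP4-style 4-bit quantization: 4-bit values plus a shared 8-bit scale per block of 32). These figures are assumptions for illustration, and treating every weight as 4-bit is a simplification; real memory use is higher once the context cache and runtime overhead are added.

```python
"""Rough estimate of how much memory a model's weights need."""

# Assumed values for illustration; check the official model cards for exact figures.
ASSUMED_PARAMS = {
    "GPT-OSS 20B": 21e9,    # ~21B total parameters (assumption)
    "GPT-OSS 120B": 117e9,  # ~117B total parameters (assumption)
}

# MXFP4-style quantization: 4-bit values plus one shared 8-bit scale per block of 32,
# i.e. 4 + 8/32 = 4.25 bits per parameter. Applying this to *all* weights is a simplification.
BITS_PER_PARAM = 4.25


def weight_memory_gb(num_params: float, bits_per_param: float = BITS_PER_PARAM) -> float:
    """Return the approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    for name, params in ASSUMED_PARAMS.items():
        print(f"{name}: ~{weight_memory_gb(params):.1f} GB of weights")
```

Under these assumptions the 20B model's weights come to about 11.2 GB, leaving some headroom on a 16GB machine, while the 120B model's roughly 62 GB is consistent with needing an 80GB-class GPU.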

In this tutorial, you'll learn how to download, set up, and start using GPT-OSS 20B in a few simple steps, completely free.

🧰 Who is this useful for:

Developers who want to build AI tools without relying on the cloud

Startups in healthcare, legal, or finance needing on-device privacy

Engineers building edge computing applications

Researchers needing offline, private experimentation with AI

Indie hackers tired of racking up API bills

STEP 1: Install LM Studio

LM Studio is a simple, powerful UI that lets you run open-weight models in a local chat environment.

It is one of the easiest ways to run GPT-OSS on Windows, macOS, or Linux.

To get started, head over to the LM Studio website (lmstudio.ai) and click the download button to get the desktop app.

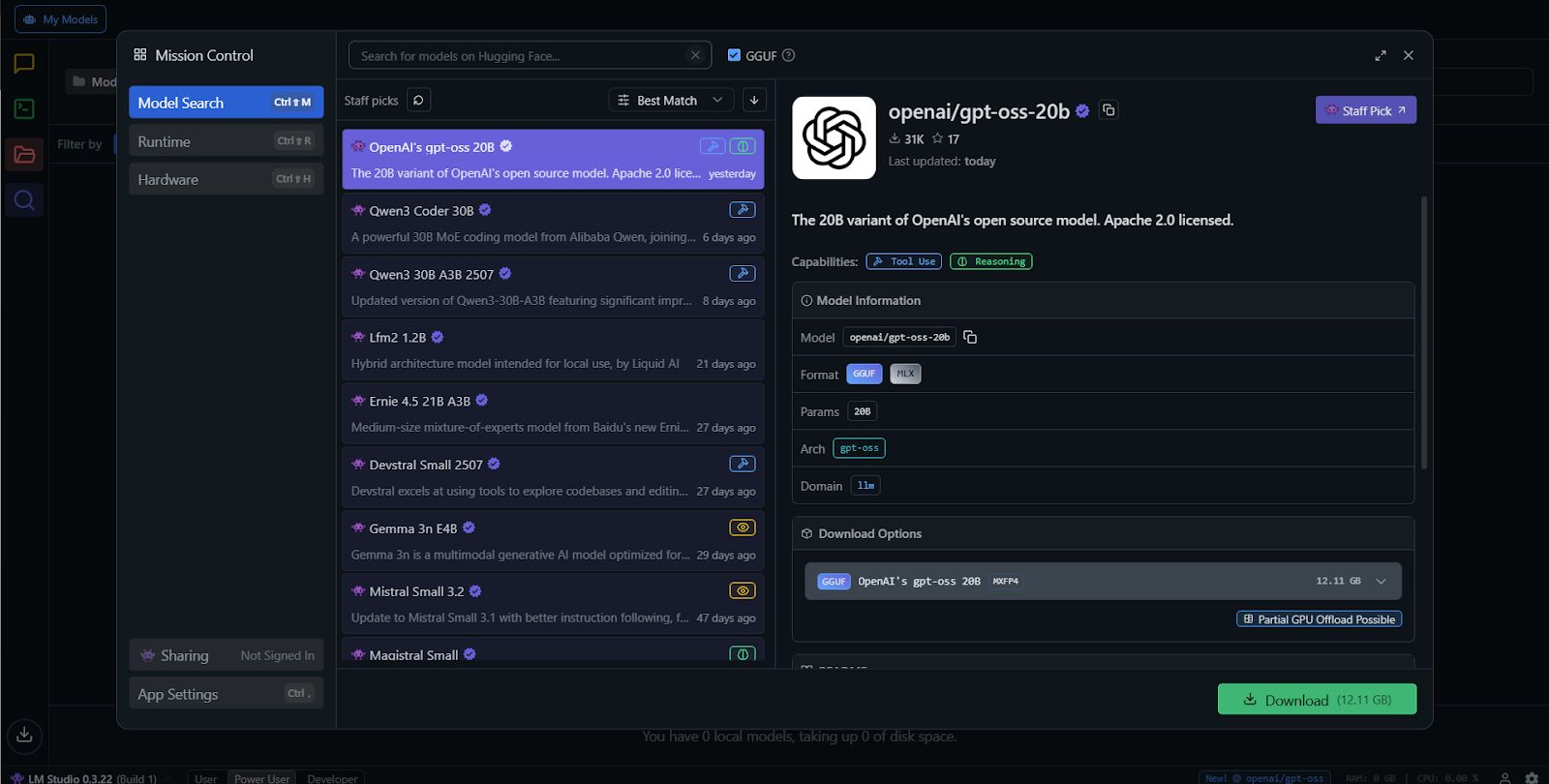

Once it's installed, you'll land on the “Models” tab, where you can browse available models, search by name, and track your downloads in real time.

STEP 2: Download GPT-OSS 20B

Now, in the Models tab, type “GPT-OSS 20B” into the search bar and select the model. Click Download, and LM Studio will do the rest.

The model is chunky (10–25GB), so grab a coffee while it downloads. Once it's on your machine, it's yours to keep and use offline.
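Because the download is large, it can be worth checking free disk space before you start. The sketch below uses Python's standard `shutil.disk_usage`. The 25GB threshold is taken from the upper end of the size range above, and checking the drive that holds your home directory is an assumption: LM Studio may store models in a different folder or on a different drive on your setup.

```python
"""Check whether a drive has enough free space for a large model download."""
from __future__ import annotations

import shutil
from pathlib import Path

# Upper end of the 10-25GB range quoted for the download (assumption: plan for the worst case).
REQUIRED_GB = 25


def has_enough_space(path: str | Path, required_gb: float = REQUIRED_GB) -> bool:
    """Return True if the filesystem containing `path` has at least `required_gb` free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 1e9


if __name__ == "__main__":
    # Assumption: models end up on the same drive as your home directory.
    target = Path.home()
    free_gb = shutil.disk_usage(target).free / 1e9
    status = "OK" if has_enough_space(target) else "not enough space"
    print(f"{target}: {free_gb:.1f} GB free -> {status}")
```

Pass a different path to `has_enough_space()` if your models folder lives elsewhere; the check applies to whichever filesystem contains that path.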

STEP 3: Run It Locally

Once it is downloaded, open the “Chat” tab. You'll see a model selector at the top; pick GPT-OSS 20B from your list of local models.

And that's it!

You can now type a prompt. To control how deeply the model thinks before responding, adjust the slider labeled “Reasoning Effort”. GPT-OSS supports low, medium, and high effort; higher settings generally produce more thorough answers at the cost of speed.
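If you'd rather call the model from your own code, here is a minimal sketch that sends a prompt to LM Studio's local OpenAI-compatible server. It assumes the server is running at its default address, `http://localhost:1234/v1` (you can start it from the LM Studio app or with `lms server start`), and that the model identifier is `openai/gpt-oss-20b`; check the identifier LM Studio shows on your machine. The `reasoning_effort` field mirrors the parameter of the same name in OpenAI's Chat Completions API; whether the local server honors it is an assumption, so the slider in the chat UI remains the reliable option.

```python
"""Minimal sketch: query a model served locally by LM Studio's OpenAI-compatible server."""
import json
import urllib.request

# Assumptions: the server runs on its default port, and this is the identifier
# LM Studio lists for GPT-OSS 20B on your machine (check and adjust).
BASE_URL = "http://localhost:1234/v1"
MODEL_ID = "openai/gpt-oss-20b"

REASONING_LEVELS = ("low", "medium", "high")


def build_chat_request(prompt: str, effort: str = "medium", model: str = MODEL_ID) -> dict:
    """Build an OpenAI-style chat completion payload.

    `reasoning_effort` mirrors the OpenAI Chat Completions field; whether the local
    server honors it is an assumption.
    """
    if effort not in REASONING_LEVELS:
        raise ValueError(f"effort must be one of {REASONING_LEVELS}, got {effort!r}")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "reasoning_effort": effort,
    }


def ask(prompt: str, effort: str = "medium") -> str:
    """Send the prompt to the local server and return the assistant's reply text."""
    payload = json.dumps(build_chat_request(prompt, effort)).encode("utf-8")
    request = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Local generation can be slow on laptops, so allow a generous timeout.
    with urllib.request.urlopen(request, timeout=300) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(ask("Explain what an open-weight model is in two sentences.", effort="low"))
```

Building the payload is kept separate from the network call so it can be checked without a running server; `ask()` only works while LM Studio's server is up with the model loaded.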

And now you are good to go.